Technology has repeatedly revolutionized the way the world works, from Alexander Graham Bell’s invention of the telephone in 1876, to the launch of Apple’s first iPhone in 2007, to the AI boom of the last decade. Now, as AI enters the mainstream, companies are racing to build it into their products and to adopt it in internal tools that boost their teams’ efficiency and productivity. Those who opt out risk being left behind in the next great transformation.

For designers, this wave of AI adoption means more resources and investment for creating exceptional AI experiences. In enterprise products, though, AI design is more than building an intelligent assistant. Successful AI design requires thinking about two very different audiences: end users and governance stakeholders. These audiences often pull in opposite directions, with one pushing for control and security and the other wanting speed and clarity.

In this article, we’ll explore the risks of neglecting either side and how to design experiences that balance usability with governance so that both users and stakeholders can trust and adopt your AI product.

When designing AI products, we tend to focus only on the end user: the person interacting with the product, typing prompts, asking questions, or reviewing AI-generated insights. But there is another audience that designers need to consider equally: governance stakeholders.

These are the people in an organization responsible for risk, security, legal compliance, data policies, and operational oversight. They might not use the AI product day-to-day, but they influence or control whether it gets approved, scaled, or shut down entirely.

End users care about whether the product helps them do their job better or faster. They may define success by asking questions like:

Their priorities include usability, clarity, and speed.

Governance stakeholders, on the other hand, aren’t the ones interacting with the product’s UI. They care about whether the tool is safe, compliant, and transparent. They may ask questions like:

They’re responsible for ensuring the product handles data safely, remains compliant, and doesn’t introduce risks that could lead to security, legal, or reputational issues.

These two audiences have very different goals and definitions of success, but both need to be addressed when making design decisions.

Ignoring either end users or governance stakeholders during the design process creates serious risks. An AI product can’t succeed by being delightful but untrustworthy, or secure but unintuitive. If either side is neglected, the entire product can fail.

At a previous company, our team built an internal AI assistant to help us quickly synthesize research insights. The tool was useful: we could ask it questions like “What are the top risks in our industry this quarter?” and it would generate summaries sourced directly from our own reports and research notes.

But while the interface was intuitive and the performance was fast, we hadn’t brought in legal or IT to review the tool from a compliance standpoint. The AI was surfacing content from a mix of internal and external sources, including confidential materials. We had no clear policies on data permissions and no visibility into which content was being used in responses. One day, someone noticed that the tool had surfaced details from a restricted internal strategy document, which triggered immediate concerns about data exposure.

As a result, legal halted the rollout and IT began an audit. Because of the poor oversight, trust in the tool faded, and in the end our organization never adopted it. The lesson is clear: great UX without governance is not enough when designing enterprise AI products. We should have involved governance stakeholders early in the design process to understand their concerns, their goals, and the risks of getting it wrong.

Designers may view governance as a restriction on their creative freedom: a blocker that slows things down or adds friction to otherwise delightful experiences. In reality, governance is just another user need, and an opportunity for designers to flex their creative skills. Just as we design for usability, we can design for security, transparency, and responsible AI use. The goal is to make safe behavior intuitive, obvious, and effortless. Here are a few ways to turn governance requirements into opportunities for a good user experience.

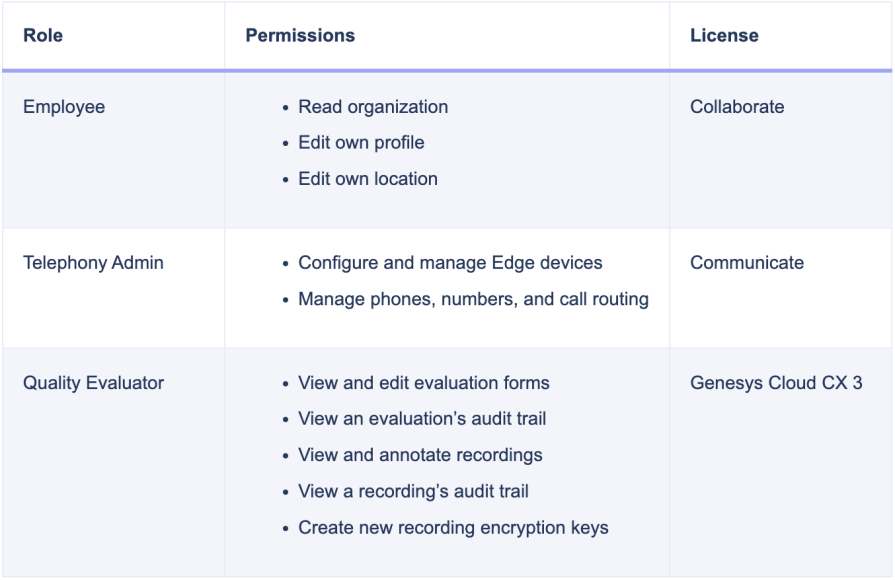

When it comes to access control, many enterprise tools bury permissions in admin panels or expect users to interpret complex role settings. Better UX can reimagine this. One approach is smart defaults that automatically assign appropriate permissions based on a user’s role or department, which reduces the need for manual setup.

Another way to improve the permissions experience is to make roles and access levels visible to users. Give users immediate context about what they can and cannot access, and why. Instead of vague labels like “Editor” or “Viewer”, AI tools can describe what the user is actually allowed to do. For example, a label that says “You can view a recording’s audit trail” tells users exactly what their access covers.
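For teams handing these patterns off to engineering, it can help to see how little logic they need. The TypeScript below is a minimal sketch, not a reference implementation: the role names, permission names, and department-to-role mapping are all hypothetical, and a real product would read them from its identity or access-management system.

```typescript
// Hypothetical permission and role names, for illustration only.
type AccessPermission = "viewSummaries" | "viewSources" | "viewAuditTrail" | "exportData";
type Role = "viewer" | "analyst" | "admin";

// Smart defaults: each role starts with a sensible set of permissions.
const ROLE_PERMISSIONS: Record<Role, AccessPermission[]> = {
  viewer: ["viewSummaries"],
  analyst: ["viewSummaries", "viewSources"],
  admin: ["viewSummaries", "viewSources", "viewAuditTrail", "exportData"],
};

// Hypothetical department-to-role mapping used to pick a default role.
const DEPARTMENT_DEFAULT_ROLE: Record<string, Role> = {
  research: "analyst",
  compliance: "admin",
};

// Unknown departments fall back to the least-privileged role.
// hasOwnProperty guards against inherited keys such as "toString".
function defaultRoleFor(department: string): Role {
  return Object.prototype.hasOwnProperty.call(DEPARTMENT_DEFAULT_ROLE, department)
    ? DEPARTMENT_DEFAULT_ROLE[department]
    : "viewer";
}

// Plain-language labels that say what the user can actually do.
const PERMISSION_LABELS: Record<AccessPermission, string> = {
  viewSummaries: "You can read AI-generated summaries",
  viewSources: "You can open the documents behind each summary",
  viewAuditTrail: "You can view a recording's audit trail",
  exportData: "You can export data outside the tool",
};

// The sentences shown on the user's access panel for a given role.
function describeAccess(role: Role): string[] {
  return ROLE_PERMISSIONS[role].map((permission) => PERMISSION_LABELS[permission]);
}
```

Falling back to the least-privileged role is a deliberate choice: when the system lacks information, it errs on the side of governance, and the descriptive labels then tell the user exactly what that role allows.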

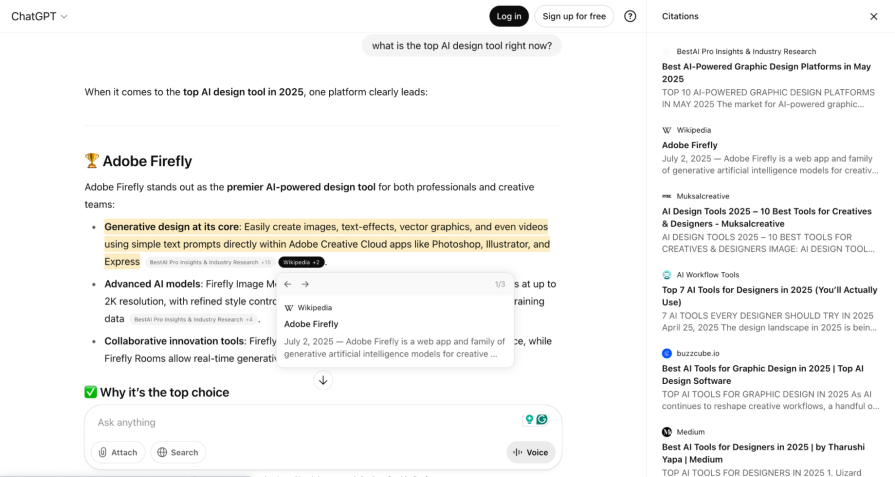

AI systems come with uncertainties, which can make both users and stakeholders hesitant to adopt a new AI product. AI transparency helps build trust by explaining things like what data the system is trained on, where it sourced its answers, or why the model made a certain recommendation.

Designers can surface this context in several ways. One is inline explanations, such as “This summary was generated from 3 sources: A, B, and C”, with a hyperlink to each source. This builds user confidence in the responses, because users can easily verify the information against its source.
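An attribution line like this is simple to generate once the retrieval step returns the documents it used. The following is a hypothetical TypeScript sketch that assumes each source arrives with a title and a URL; the `Source` shape and the Markdown link format are illustrative choices.

```typescript
// Hypothetical shape of a retrieved source.
interface Source {
  title: string;
  url: string;
}

// Join names as "A", "A and B", or "A, B, and C".
function joinWithAnd(items: string[]): string {
  if (items.length <= 1) return items.join("");
  if (items.length === 2) return `${items[0]} and ${items[1]}`;
  return `${items.slice(0, -1).join(", ")}, and ${items[items.length - 1]}`;
}

// Build the attribution line shown under an AI-generated summary.
// With withLinks = true, each source becomes a Markdown link.
function attributionText(sources: Source[], withLinks = false): string {
  if (sources.length === 0) {
    // Be explicit when nothing backs the answer instead of staying silent.
    return "This summary isn't linked to any source, so verify it before relying on it.";
  }
  const names = sources.map((s) => (withLinks ? `[${s.title}](${s.url})` : s.title));
  const noun = sources.length === 1 ? "source" : "sources";
  return `This summary was generated from ${sources.length} ${noun}: ${joinWithAnd(names)}`;
}
```

Handling the zero-source case matters for transparency: an answer with no sources should say so rather than look just as grounded as one with three.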

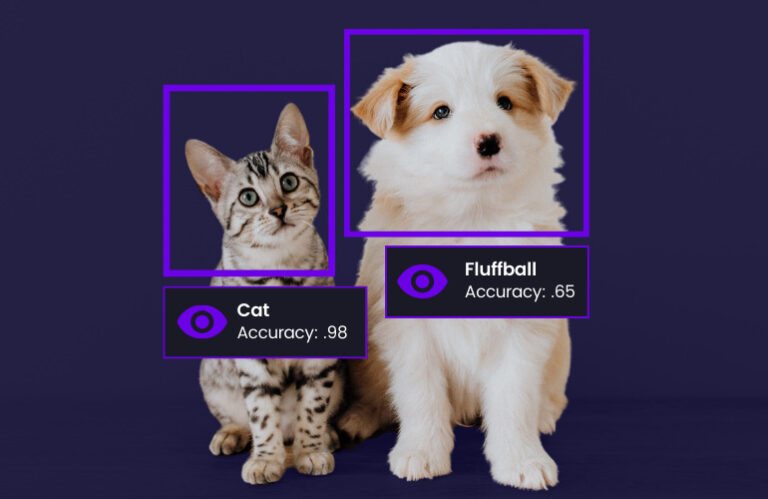

Another option is to show model confidence indicators, such as low, medium, or high certainty scores or callouts. AI models sometimes “hallucinate”, generating inaccurate or fabricated responses that seem plausible or realistic. Showing a confidence score alongside AI-generated content reminds users that AI models are not always right and that they should do their own fact-checking before relying on the output.
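Mapping a numeric score to low, medium, and high levels might look like the sketch below. It assumes the model or retrieval layer exposes a score between 0 and 1, which many do not provide out of the box, and the 0.8 and 0.5 cut-offs are placeholders to tune against real data.

```typescript
type ConfidenceLevel = "low" | "medium" | "high";

// Placeholder cut-offs; tune them against how often answers at each
// score actually turn out to be correct.
const HIGH_THRESHOLD = 0.8;
const MEDIUM_THRESHOLD = 0.5;

function confidenceLevel(score: number): ConfidenceLevel {
  // Reject anything outside [0, 1], including NaN.
  if (isNaN(score) || score < 0 || score > 1) {
    throw new RangeError(`Confidence score must be between 0 and 1, got ${score}`);
  }
  if (score >= HIGH_THRESHOLD) return "high";
  if (score >= MEDIUM_THRESHOLD) return "medium";
  return "low";
}

// Callout copy shown next to the answer; every level asks users to verify.
const CALLOUT: Record<ConfidenceLevel, string> = {
  high: "High confidence. Spot-check key facts against the sources.",
  medium: "Medium confidence. Verify important details before using this answer.",
  low: "Low confidence. This answer may be inaccurate; check the sources first.",
};

function confidenceCallout(score: number): string {
  return CALLOUT[confidenceLevel(score)];
}
```

Even the high-confidence callout nudges users to spot-check, since a confident-sounding answer can still be a hallucination.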

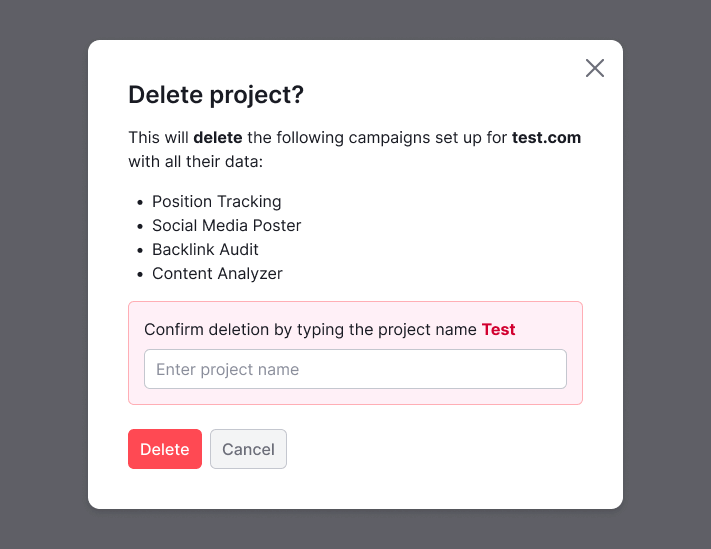

Finally, if the AI tool triggers sensitive actions, such as sharing client data, it can help to add some friction in the form of tooltips or modals that inform the user of the potential consequences. It’s better to warn users about the risks of an action and empower them to make informed decisions. Depending on the use case, blocking users from making their own decisions can lead to confusion, frustration, and lower satisfaction with the product.
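This warn-then-decide pattern can be sketched as a small wrapper around an action. In this hypothetical TypeScript example, the `sensitive` flag and `consequence` text are assumed to come from the product team or a policy configuration, and `confirm` stands in for whatever modal the UI uses.

```typescript
interface AssistantAction {
  name: string;
  // Hypothetical flag set by the product team or a policy configuration.
  sensitive: boolean;
  // Plain-language consequence shown in the modal, e.g. who will see the data.
  consequence?: string;
  run: () => string;
}

// Supplied by the UI: show the message in a modal and return the user's choice.
type ConfirmFn = (message: string) => boolean;

function performAction(action: AssistantAction, confirm: ConfirmFn): string {
  if (action.sensitive) {
    const detail = action.consequence ?? "This action may expose sensitive data.";
    // Inform first, then let the user decide instead of blocking outright.
    if (!confirm(`${action.name}: ${detail} Do you want to continue?`)) {
      return "cancelled";
    }
  }
  // Non-sensitive actions run without any extra friction.
  return action.run();
}
```

In a quick browser prototype, you could pass `(message) => window.confirm(message)`; a production UI would use a proper modal. Either way, the user makes the final call instead of being silently blocked.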

Too often, governance concerns surface late in the development process, and reworking designs right before launch because of a missed requirement is an expensive mistake. A better approach is to bring governance stakeholders into the process from day one, just as you would engineers or product managers. That way, their needs are built into your designs from the start, and there are fewer surprises down the road.

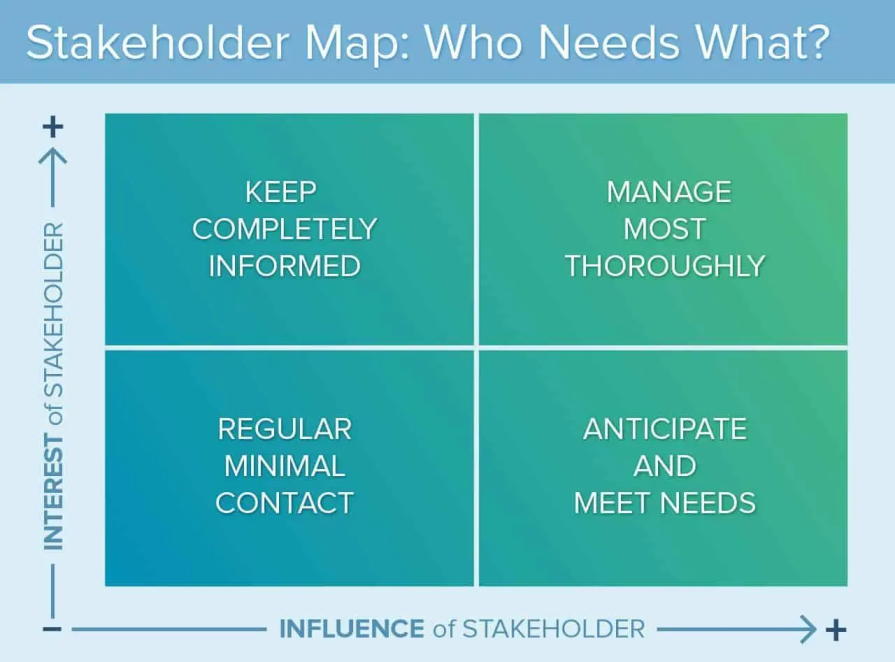

A helpful alignment activity to run at the start of a project is a stakeholder mapping workshop. It lets the team identify everyone involved in the project, directly or indirectly, and clarify their roles, priorities, and concerns. Stakeholders might include end users, legal, IT, or government organizations. Based on each stakeholder’s level of influence, you can decide how and when to engage with them throughout the project.

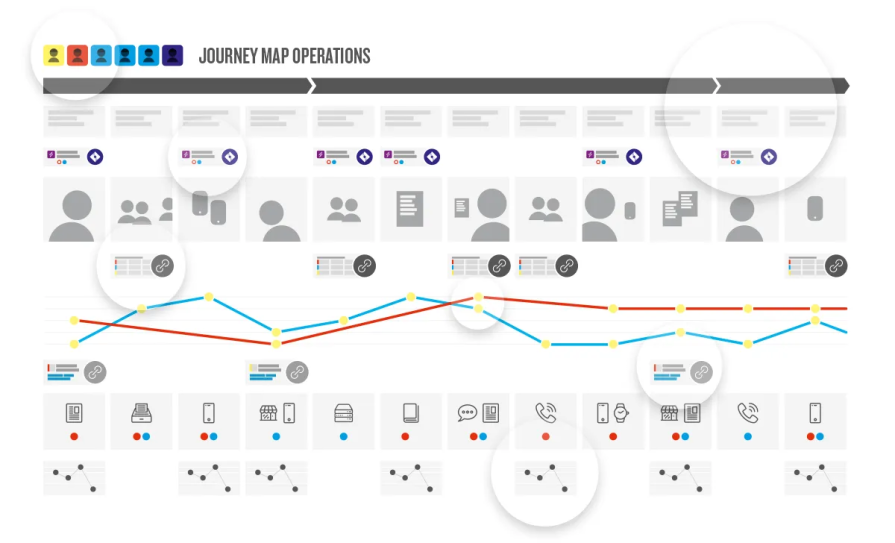

Once you’ve identified your key players, the next step is to map out the user journey. Rather than focusing solely on the end user, overlay their journey with the governance stakeholders’ journey. Throughout the journey, probe stakeholders with questions like:

This will help reveal where needs intersect and the friction points to pay close attention to. Look for flows where the risks of error or misunderstanding are higher, such as data handling or AI recommendations. For example, users may expect instant answers based on data, but governance stakeholders require data to be masked or restricted depending on the sensitivity level. Users may expect personalized recommendations, but governance stakeholders need explanations for how those outputs were generated and whether they meet compliance standards.
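A requirement like sensitivity-based masking is easier to agree on when designers and engineers can see the exact rule. The TypeScript below is a hypothetical sketch: the four sensitivity levels and the rule that restricted content is dropped while other content above the user’s clearance is masked are assumptions for illustration, not a standard policy.

```typescript
// Hypothetical sensitivity levels, ordered from least to most sensitive.
const LEVELS = ["public", "internal", "confidential", "restricted"] as const;
type Sensitivity = (typeof LEVELS)[number];

interface Snippet {
  text: string;
  sensitivity: Sensitivity;
}

const MASKED = "[Masked: you don't have access to this content]";

// Decide what the assistant may show a user with the given clearance.
// Returns null when the snippet must be withheld entirely.
function applyPolicy(snippet: Snippet, clearance: Sensitivity): string | null {
  if (LEVELS.indexOf(snippet.sensitivity) <= LEVELS.indexOf(clearance)) {
    return snippet.text; // within the user's clearance: show as is
  }
  if (snippet.sensitivity === "restricted") {
    return null; // restricted content never reaches the answer
  }
  return MASKED; // otherwise, mask it but signal that something was withheld
}

// Build the context an answer may draw on, dropping withheld snippets.
function visibleContext(snippets: Snippet[], clearance: Sensitivity): string[] {
  return snippets
    .map((s) => applyPolicy(s, clearance))
    .filter((t): t is string => t !== null);
}
```

Masking confidential content keeps users aware that something exists, while restricted material, like the strategy document in the earlier story, never reaches the answer at all.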

After the journey is mapped out, collaborate with your team to define and prioritize requirements from both the user and governance sides. This creates a shared understanding of what needs to be completed for the product to launch successfully. By asking these questions early and designing with both sides in mind, you reduce risk, avoid last-minute blockers, and build a product that’s not only intuitive to use but also trustworthy, scalable, and compliant.

Then, start to layer those requirements into your designs. This can look like using confirmation dialogs for sensitive actions, tooltips that explain the model’s confidence level, or visible audit trails that track who did what and when. Adding these thoughtful elements to your design can make your users feel informed and in control when using the AI tool.
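A visible audit trail starts with recording who did what and when. This is a minimal in-memory TypeScript sketch with hypothetical names; a real product would write entries to durable, tamper-resistant storage that governance stakeholders can review.

```typescript
// One row in a visible audit trail: who did what, to which item, and when.
interface AuditEntry {
  readonly actor: string;
  readonly action: string;
  readonly target: string;
  readonly timestamp: string; // ISO 8601, UTC
}

class AuditTrail {
  private readonly entries: AuditEntry[] = [];

  // Append an entry; entries are frozen so they can't be edited later.
  record(actor: string, action: string, target: string, at: Date = new Date()): AuditEntry {
    const entry: AuditEntry = Object.freeze({ actor, action, target, timestamp: at.toISOString() });
    this.entries.push(entry);
    return entry;
  }

  // Return a copy so callers can't add or remove entries from the real log.
  list(): AuditEntry[] {
    return this.entries.slice();
  }

  // Human-readable line for an audit-trail panel in the UI.
  static describe(entry: AuditEntry): string {
    return `${entry.timestamp}: ${entry.actor} ${entry.action} ${entry.target}`;
  }
}
```

Rendering each entry as a plain sentence keeps the trail readable for users, while the structured fields remain available for governance reviews.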

Designing great AI products means designing for two audiences: end users and governance stakeholders. End users care about speed, clarity, and integration into their existing workflow, while governance stakeholders focus on data security, auditability, and policy compliance. When either audience is ignored, this can lead to failed rollouts, low adoption, or serious risk exposure.

If you’re working on an AI product, be intentional about identifying and engaging relevant stakeholders throughout your design process. Involve governance stakeholders early so that their needs and requirements aren’t missed and you have room to find creative ways to address them within the user experience.

Governance is essential to a good user experience, not a barrier to it, and it doesn’t have to restrict your creative process. Mapping out the user journey can help you see where governance and user needs intersect. When you design for both thoughtfully, you create experiences that build trust and confidence, leaving users informed and in control when using your AI tool. For AI products to succeed, digital safety is not optional.
